In recent years, one of the most discussed issues has been whether artificial intelligence should be regulated and, if so, how. The challenge is twofold: on the one hand, regulation should promote the development of AI technologies that help achieve vital goals for society; on the other hand, these technologies must not infringe on fundamental human rights and freedoms. In April 2021, the European Commission published the very first draft Regulation on Artificial Intelligence (AI). The Regulation is expected to be adopted, with all corrections and amendments, by the end of 2022, and its provisions should be implemented by 2024.

In this blog, we will try to summarize the key elements of the proposed Regulation, focusing mainly on its scope and on the main challenges that its requirements will create.

So, the main question is: what should we expect from the proposed Regulation?

First of all, the Regulation is intended to regulate not artificial intelligence itself, but AI systems.

The Regulation defines "AI systems" broadly and imposes specific obligations on participants at various points in the AI value chain, from providers and product manufacturers to importers, distributors and users of AI systems.

Another important element of the Regulation is the establishment of a European Artificial Intelligence Board, which would support the consistent application of the Regulation and coordinate the work of national supervisory authorities.

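The Regulation defines an AI system as "software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with". Annex I covers machine learning approaches, logic- and knowledge-based approaches, and statistical approaches, Bayesian estimation, and search and optimization methods.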

The definition is meant to cast a wide net, covering not only AI systems offered as standalone software products but also products and services that rely directly or indirectly on AI.

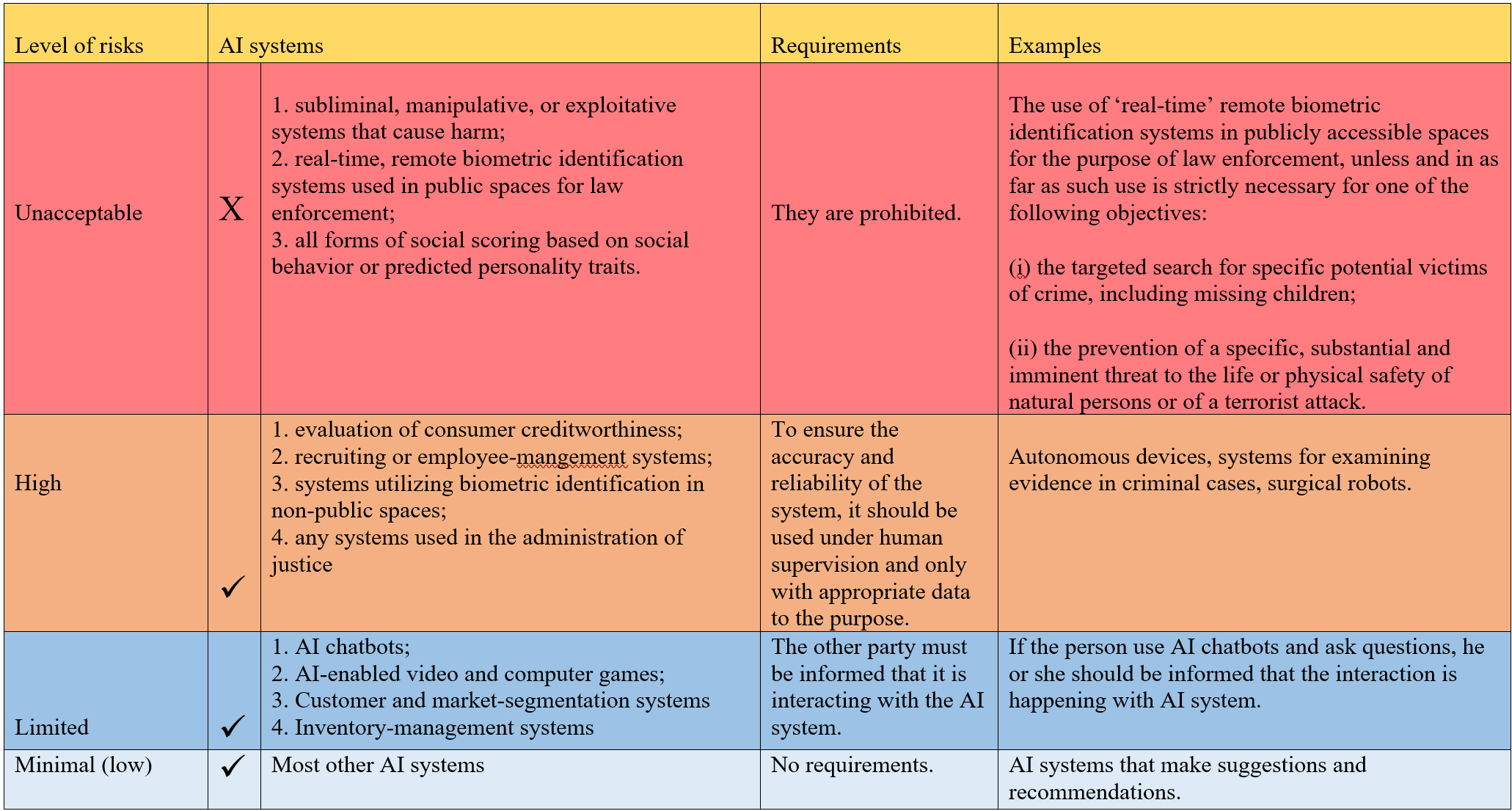

Another innovation introduced by the Regulation is the risk classification of artificial intelligence systems. The table below shows the risk classes defined in the Regulation.

AI systems are classified into four risk groups: 1) unacceptable; 2) high; 3) limited; 4) minimal (low).

The draft EU AI Regulation places several requirements on organizations providing or using high-risk AI systems, including:

- Implementation of a risk-management system;

- Data governance and management;

- Human oversight;

- Accuracy, robustness, and cybersecurity;

- Conformity assessment.

Fines:

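The penalties the Regulation imposes for non-compliance are more onerous than those provided for in the GDPR. Using AI systems that pose an unacceptable level of risk (the prohibited practices) can lead to a fine of up to 30 million euros or, for companies, up to 6% of total worldwide annual turnover, whichever is higher. By comparison, the highest tier of GDPR fines is capped at 20 million euros or 4% of annual worldwide turnover.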

Shortcomings and criticism of the Regulation:

1) Definition of AI:

In our opinion, the crucial problem with this Regulation is the definition of AI, from which all the other problems follow. The current definition of AI systems is both too technical and too broad. Lawyers and critics have advocated a definition based on properties or effects rather than on techniques. A related problem is that, because the listed techniques include even statistical approaches and search and optimization methods, almost any software we use could be considered AI. This does not seem to be the right approach, and for this reason we expect the final version of the Regulation to define AI more concretely and precisely.

2) Overregulation of AI and stifling innovation?

The proposed requirements can be seen as "overregulation" that creates major barriers for small and medium-sized enterprises (SMEs) developing innovative AI systems. The concern is that SMEs will struggle to pass the conformity assessments based on these standards. In this sense, the draft regulation is "company-centric" rather than "human-centric": fundamental rights concerns, and concerns about what AI technologies could do to our society, especially to more vulnerable population groups, are not addressed.

The biggest problem, in our opinion, is the uncertainty surrounding the concept of artificial intelligence in general. Can artificial intelligence be considered an object of law when it can solve tasks and problems without human involvement? Or can it be a subject of law when it lacks the natural qualities of a person and cannot be held responsible for its offences? This question remains open, but what if artificial intelligence were given a special category in law, like an object within a subject?

The question is rhetorical and, for now, difficult to answer, because it is still unclear what counts as artificial intelligence and what does not.